ASUS DEBUTS AGEIA PHYSX HARDWARE - BENCHMARK REVIEW TEST


By Zaarco. Posted 2006-05-08.


A little over a year ago, we first heard about a company called AGEIA whose goal was to bring high-quality physics processing power to the desktop. Today they have succeeded in their mission. For a short while, systems with the PhysX PPU (physics processing unit) have been shipping from Dell, Alienware, and Falcon Northwest. Soon, PhysX add-in cards will be available in retail channels. Today, the very first PhysX-accelerated game has been released: Tom Clancy's Ghost Recon Advanced Warfighter, and to top off the excitement, ASUS has given us an exclusive look at their hardware.

AGEIA PhysX Technology and GPU Hardware

First off, here is the lowdown on the hardware as we know it. AGEIA, being the first and only consumer-oriented physics processor designer right now, has not given us as much in-depth technical detail as other hardware designers. We certainly understand the need to protect intellectual property, especially at this stage in the game, but this is what we know.

PhysX Hardware:

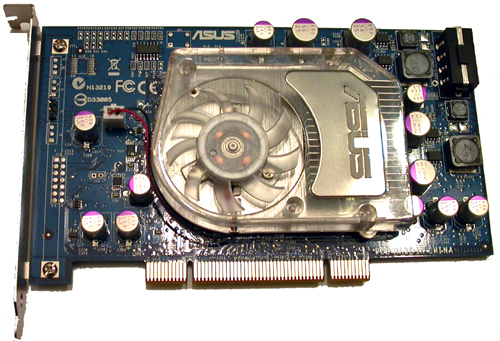

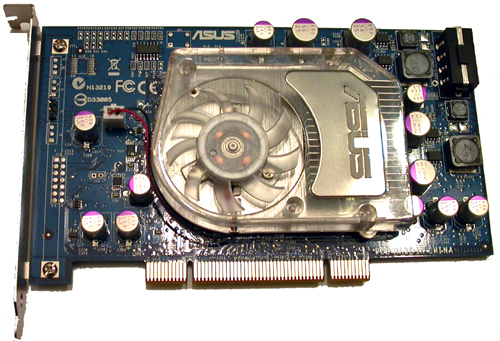

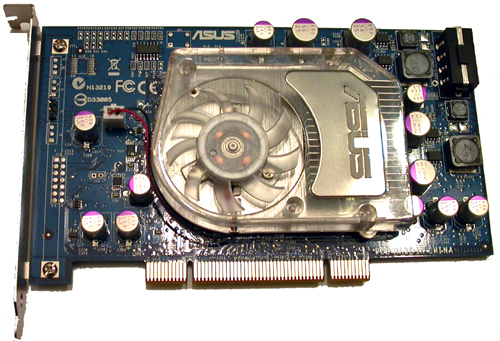

ASUS PhysX Card

It's not as dramatic as a 7900 GTX or an X1900 XTX, but here it is in all its glory. We welcome the new ASUS PhysX card to the fold.

The chip and the RAM are under the heatsink/fan, and there really isn't that much else going on here. The slot cover on the card has AGEIA PhysX written on it, and there's a 4-pin Molex connector on the back of the card for power. We're happy to report that the fan doesn't make much noise and the card doesn't get very warm (especially when compared to GPUs). We did have an occasional issue when installing the card after the drivers were already installed: after we powered up the system the first time, we couldn't use the AGEIA hardware until we hard powered off our system and then booted up again. This didn't happen every time we installed the card, but it did happen more than once. This is probably not a big deal and could easily be a result of the fact that we are using early software and early hardware. Other than that,


