NVIDIA Tesla V100 will be offered in a version with a PCI Express 3.0 interface


POSTER: computer news

DATE: 2017-06-21


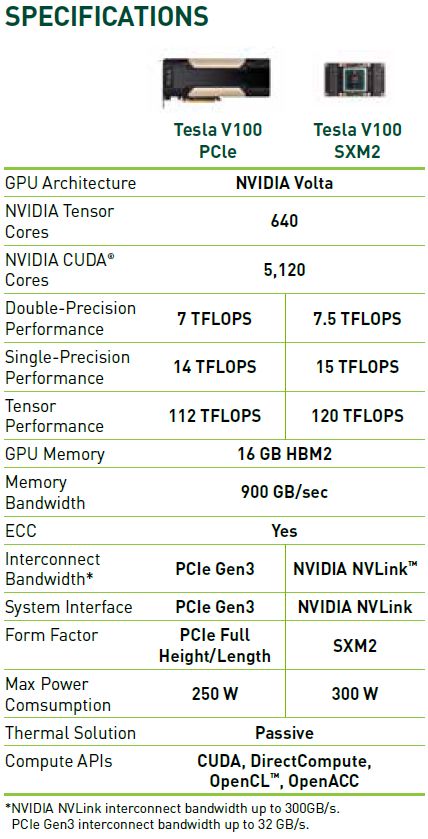

Back in mid-May we got a first, distant look at the NVIDIA Tesla V100 accelerator in its PCI Express 3.0 version. In addition to the version with a TDP of no more than 250 W, a more compact accelerator with a TDP of no more than 150 W is being developed. Clearly, the latter will have to make some compromises in terms of speed, but so far its specifications remain a closely guarded secret.

The full-size Tesla V100 with a PCI Express 3.0 interface, however, has recently been presented to the general public. NVIDIA's website already shows not only an image of this accelerator, which will appear in partners' ready-made systems before the end of the year, but also the specifications of the new card, which carries 16 GB of HBM2 memory.

In this version, the TDP of the Tesla V100 is limited to 250 W, which is 50 W less than that of the SXM2 version. The cooling system has no fans on the accelerator itself; they are expected to be located in the server chassis, pushing air through the heatsink.

The lower TDP comes at the cost of roughly 6.5% in performance. The memory runs at the same frequency as in the SXM2 version; only the graphics processor had to give up some of its clock speed. In addition, the Tesla V100 with a PCI Express 3.0 interface does not support NVIDIA's proprietary NVLink interface, which offers nearly ten times the bandwidth. One of the NVIDIA partners that will offer the Tesla V100 in this version is HPE.
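To put the trade-off in perspective, here is a rough back-of-the-envelope sketch (our own estimate, not an NVIDIA figure) comparing performance per watt of the two versions. It assumes both cards run at their full TDP (300 W for SXM2, i.e. 250 W plus the 50 W difference, and 250 W for PCI Express) and that the roughly 6.5% performance loss applies uniformly to all workloads; real power draw under load need not equal TDP. The function name `relative_perf_per_watt` is purely illustrative.

```python
# Rough performance-per-watt comparison of the two Tesla V100 versions.
# Assumptions (not NVIDIA data): both cards run at their full TDP, and the
# ~6.5% performance loss quoted in the article applies uniformly.

SXM2_TDP_W = 300.0      # SXM2 version TDP (250 W + the 50 W difference)
PCIE_TDP_W = 250.0      # PCI Express 3.0 version TDP
PCIE_PERF_LOSS = 0.065  # approximate performance loss of the PCIe version


def relative_perf_per_watt(perf_loss: float, tdp_w: float, ref_tdp_w: float) -> float:
    """Return perf/W of a card relative to a reference card (reference = 1.0)."""
    relative_perf = 1.0 - perf_loss      # performance as a fraction of the reference
    relative_power = tdp_w / ref_tdp_w   # power as a fraction of the reference
    return relative_perf / relative_power


if __name__ == "__main__":
    power_cut = 1.0 - PCIE_TDP_W / SXM2_TDP_W
    ratio = relative_perf_per_watt(PCIE_PERF_LOSS, PCIE_TDP_W, SXM2_TDP_W)
    print(f"Power reduction: {power_cut:.1%}")
    print(f"Performance reduction: {PCIE_PERF_LOSS:.1%}")
    print(f"Relative perf/W of PCIe version: {ratio:.3f}")
```

Under these assumptions a 16.7% cut in power costs only 6.5% in performance, so the PCI Express version would deliver about 1.12 times the performance per watt of the SXM2 version.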


